Author: Kaiwen Leong

Institution: Boston University

Date: April 2007

Abstract

The lowly fixed-point recursion xn+1 = f(xn), which is at the bottom of the iterative methods evolutionary ladder, should come before the Newton-Raphson method. Yet in calculus texts the latter takes precedence, due probably to the appeal of its plausible geometrical interpretation visualized so convincingly as sliding down tangent lines. But relying on graphs and pictures can lead to simplistic thinking.

Introduction

This paper deals with the solution of non linear equations of one unknown by iterative means. Since the solution of a nonlinear equation is mostly irrational and cannot very often be expressed even in terms of radicals such as square roots or other radicals it must be solved numerically in terms of decimal approximations. Such equations are for the greatest part solved iteratively by choosing a reasonable rational or decimal initial guess, then repeatedly improving it by some iterative procedure until the improved approximation is of sufficient accuracy---until convergence is achieved. It is in the nature of things that such iterative procedures are commonly nested within outer iterative loops that are typically repeated a huge number of times and it is therefore imperative that they be fast and converge in few iterative steps. This is a central issue in the mathematical discipline of numerical analysis and is worthwhile studying for theoretical as well as practical reasons. In fact, one of the commonest tasks mathematics is called to perform in its application to the sciences is the solution of the ubiquitous nonlinear equation. So much so that one of the earliest applications of calculus by both Newton (1643-1727), the inventor of calculus, and Halley (1656-1727), the famous astronomer, was to this important question of how to systematically and effectively solve a nonlinear equation, and keen interest in their iterative methods did not abate up to this day. See the list of papers in the References and the extensive discussions in the two books (14, 17). Thus, this mathematical topic is of both historical and current interest and any contribution made to it should be of wide interest.

This paper is not intended to be a historical review of the subject of Newton's method, and actually, any mathematical paper is based on the assumption that the interested reader is familiar with the state of the art. A reader interested in greater developmental details looks up the references. Still, in order to pursue the lines of thinking prevalent in mathematics we will briefly survey the references. The first paper (1) by Franklin from 1881 reconsiders the convergence conditions of Newton's method. He writes: "The proof, as usually given, is somewhat tedious; the following proof is very brief. ." Then: "Serret, in his Cours d'Algebre Superior (Vol. 2, pp. 346-348), deduces by a long and somewhat difficult method the result. ," being testimony to the fact that after a proof has been given the issue is not closed but rather more mathematical effort is being invested in trying to simplify the proof for didactical reasons. Ref. (2) suggests using the reversion of series method, on which we comment later in the introduction. The paper (3) by Bateman is of a historical nature, relating the work of Halley to an earlier publication by the French mathematician Lagny. M. G. Pawley (4) obtains error expressions, using power series, for the approximations generated by Newton's method, and so do L. M. Weiner (14), W. Gander (20) and A. Melman (18), the latter with a comment on Euler's method. Newton's method is based on approximating a function by a tangent line, Frame (5) suggests the approximation of the function by a parabola. The danger with a parabola is that, unlike the line, it turns around and may fail to intersect the axis. Ref. (6) recalls the use of inverse functions, and Ref. 7 suggests the derivation of Halley's method by power series. We comment on Hamilton's (8) and Wolfe's (12) papers later in the introduction. Ref. (9) reconsiders Hamilton's method, while references (10), (21) and (24) are, again, of a historical nature. Ref. (11) derives Halley's method in a short way, and so does S. M. Venit (17); and it all comes to show the great interest in these methods and the effort directed towards their simplification. B. H. Brown (19) derives Halley's method from the fixed-point iteration with the specific weight function 1/√f', leaving it unclear how to generalize it. J. Gerlach (23) suggests the generalization of the th root, a derivation simplified in Ref. (25). Our method is also predicated on weight functions, but there is not need for them explicitly, a definite advantage. Reference (22) deals with the application of Newton's method to the computation of the natural logarithm. In section 7 we show how to do this by higher order methods. Ref. (26) discusses the convergence difficulties at points with vertical tangent line.

All these references are accessible to any undergraduate student who has taken a good calculus course, and are available on the internet. An interested student can study them in depth from the comfort of his home. The books (13, 16) are brought here for general reference.

What were the improvements proposed to these methods in the many years since Newton invented calculus? 1. Higher order, more efficient, methods were suggested for use on the digital computer. 2. Special variants of these methods were devised for equations of particular nature in order to take advantage of their special properties and structure to a hasten convergence. 3. The derivation of these higher order methods was systematized and simplified for better and shorter presentation in textbooks and classrooms. 4. The method was extended to functions of several variables. See for instance (16).

A simplified proof or an insightful explanation of a mathematical procedure appropriate for classroom presentation is of definite mathematical value.

What is our present contribution to the subject of iterative methods and what motivated us to undertake this study? A couple of years ago I (K.L.) took professor's Fried course on numerical analysis at BU at which we naturally discussed the numerical solution of nonlinear equations. A series of projects and paper readings led us to the belief that the derivation of the various techniques we were studying could be generalized and simplified for the benefit of both the student and the man of application. Subsequently I took several independent studies courses with professor Fried culminating in this paper.

We show here for the first time how sophisticated iterative procedures are naturally and easily derived from a simple, basic algorithm. Doing that we gain insight into the working of Newton's method. Specifically, we show that under some conditions the venerable method of Newton can be simplified (which we call the correct method,) a point that should be of interest to every student of Calculus as well as to every practical numerical analyst. We devise a novel simple procedure for the derivation of higher order iterative methods of any degree, a procedure that can be easily and appealingly presented to undergraduate students. Lastly, we show that these higher order methods are in some mathematical sense optimal.

How is our work compare to other published mathematical papers on the same subject? In our extensive literature search we have come upon two papers that come close to dealing with the same issues as ours. The first is the paper by J. M. Wolfe (13) who derives higher order iterative methods using an inverse function power series expansion suggested in (7), but his algorithm is not optimal in the same sense that our methods are. A rational approximation p/q to √N is said to be optimal if p2-Nq2. An iterative method is said to be optimal if, started with an optimal initial guess, it keeps generating optimal rational approximations. The other paper is by H. J. Hamilton (13). He also derives his higher order methods using the cumbersome inverse function power series expansion technique suggested in (7). Hamilton's methods include as a special case the classical second order method of Newton and the third order method of Halley, but his derivation of these iteration is by far more complicated and lengthy than the one so concisely presented here by us.

To understand why getting the higher order derivatives of the inverse function ϕ(x) to function is a cumbersome affair recall that by definition φ'(f'(x)) = x so that by the chain rule of differentiation:

φ'(f)f'(x) = 1 or φ"(f) = -f"(x)/(f'(x))3,

φ'''(f) = (3f''(x)2 f'(x)f'''(x))/f'(x)5,

φ''''(f) = (10f''(x)f'''(x) 15f''(x)3 f'(x)2f''''(x))/f'(x)7,

expressions that become quickly very complicated. Admittedly, getting these higher order derivatives is an interesting and useful exercise of calculus, but it is better to leave them out if possible.

Fixed-point iteration

A solution of an equation in the form x = f(x), where f(x) is some function of variable , is called a fixed point of f(x). For example, x = 7/2 is the sole fixed point of the function f(x) = 3x 7 since 7/2 = 3(7/2) - 7. In case the fixed point of a more general function is an irrational number, as is the case of, say,x = cos x one seeks to obtain acceptably good rational approximations to it.

Under some easily verifiable conditions on f(x)the sequence x0, x1, x2, . , xn generated by the recursionxn+1 = f(xn) started with a good initial guess x0, converges to a fixed point of functionf(x). This practical, utterly simple, procedure for the systematic generation of a string of ever improving rational approximations to a fixed point is the fixed-point iteration. We start consideration of the fixed-point iteration by looking first at the simple case of the linear function f(x) = k(x a) + a that has the unique fixed pointx = a for any value of constant k ≠ 1. For this function the fixed-point iterative method becomes xn+1 = k(xn - a) + a or |x n+1 - a| = |k| |(xn - a)| from which we conclude that if |k| < 1, then point xn+1 is closer to fixed point a than point xn, and consequently that convergence of xn to a takes place, independently of the starting point x0. We write en = xn a and have |e n+1 | = |k| |en|. Such convergence pattern is called linear. If |e n+1 | = |k| |en|2, then the convergence is termed quadratic, and if |e n+1 | = |k| |en|3, then convergence is said to be cubic. High order convergence means very fast convergence near the fixed point.

Convergence is also faster the smaller|k| is, and is instantaneous if |k| = 0. In the general nonlinear case, the global conditions for the linear f(x) translate into local conditions for a nonlinear f(x) near the fixed point. Considering the tangent line to f(x) instead of the function itself we expect convergence of the fixed point iteration to occur if the slope of the line is less than one in magnitude, namely if |f'(a)| < 1. Moreover, we expect higher order convergence to occur if f(x) is flat near the fixed point---if the function looks like a horizontal line in the vicinity of the fixed point, that is, if f'(a) = f''(a) = f'''(a) = . = f(m)(a) = 0 for some integer m. This is the essence of the fundamental fixed-point theorem.

Theorem. Let be a fixed point of the function f(x), a = f(a). Suppose f(x) to have a bounded derivative of order m+1 on an open interval containing the fixed point. If

f'(a)= f''(a) = f'''(a) = ... = f(m)(a) = 0 but f(m+1)(a) ≠ 0,

then the sequence x0, x1, x2, ., xn generated by xn+1 = f(xn) is such that |xn+1 - a| < c|xn - a|m for some constant c > 0, provided that x0 is close enough to a.

Proof. We shall prove the theorem for the specific case of m = 2. Let f'''(x) be bounded on the interval ™a δ, a + δ› for some δ > 0, and assume x ∈ I. Taylor's expansion of f(x) = 0 around point a is

f(x) = f(a) + (x-a)f'(a) + (1/2)(x-a)2f''(a) + (1/6)(x-a)3f'''(ζ)

where a < ζ < x if x is to the right of a. By the assumption that f(a) = a, f'(a) = f''(a) = 0, the Taylor expansion is reduced to f(x) - a = (1/6)(x a) 3 f′"(ξ) resulting in the inequality |f(x) - a| ≤ (M/6)|x a| 3 where M is an upper bound on in interval I. For f(x) the inequality becomes

|x1 - a| ≤ c|x0 a| 3, c = M/6

Now, if f(x) is chosen such that c|x0 a| < 1, then |x1 a| < |x0 a| implying that x1 ∈ I, and the method converges. End of proof.

In this paper we shall show how the fixed-point theorem may be used to systematically generate high order iterative algorithms of use in mathematical physics, engineering and economics.

The Newton-Raphson (NR) method

To keep matters simple we assume that the function f(x) is twice differentiable everywhere. Suppose number a exists such that f(a) = 0 but f'(a) ≠ 0. We seek to iteratively generate ever better approximations to number a. If constant A ≠ 0, then x = F(x), F(x) = x + Af(x) is equivalent to f(x) = 0, for which we propose the fixed-point iteration xn+1 = xn + Af(xn). To secure quadratic convergence we require that F'(a) = 0 and we determine that A = -1/f'(a) so that xn+1 = xn f(xn)/f'(a), which we call the correct Newton-Raphson method. Since a is unknown we do the next best thing and replace it by xn to have the classical NR method xn+1 = xn f(xn)/f'(xn). To prove the quadratic convergence of the classical NR method we show it to be a special case of the fixed-point iteration. Indeed, if F(x) = x f(x)/f'(x), then

F'(a) = 1 (f'(a)f'(a) f'(a)f''(a))/(f'(a)f'(a)) = 0

Since f(a) = 0 and f''(x) is bounded around a. For example, application of the classical NR method to f(x) = x2-1 yields the recursion xn+1 - 1 = (xn - 1)2/(2xn) that quadratically converges to a = x∞ = 1 for any x0 > 0. Feigning to know that f′(1) = 2 we obtain from the correct NR method the recursion xn+1 = 1 - (xn - 1)2/2 that also quadratically converges to one from any -1 < x0 < 3.

Yet it may happen that even if root a of f(x) = 0 is unknown still f'(a) is known via a differential equation for f(x). Consider using the NR method for computing [1] the natural logarithm. Here, for a given α, f(x) = ex - α, the root of which is , and f'(a) = α . The classical NR method for this function is xn+1 = xn - (eXn - α)/eXn, while the correct NR method is xn+1 = xn - (exn - α)/α. Practically, the two methods may not be far apart, but if f'(a) is known not applying the correct NR method would appear silly.

Halley's method.

The fixed-point iteration theorem is used here to derive a higher order method for the approximate solution of the nonlinear equation f(x) = 0. Let a be the (say unique) root of f(x), and let g(x) be a function twice differentiable in the neighborhood of a and such that g(a) ≠ 0. Then the fixed point a of F(x) = x + g(x)f(x) is a root of f(x). We shall chose now a weight function g(x) to have a cubically convergent fixed-point iteration xn+1 = F(xn), namely, we shall select function g(x) to assure that F'(a) = F''(a) = 0. We differentiate F(x) twice to have F(x) = 1 + gf' + g'f and F''(x) = gf'' + 2g'f' + g''f. At point a, f(a) = 0, and the zero conditions F'(a) = F''(a) = 0 become the pair of equations 1+ gf' + g'f = 0, gf'' + 2g'f' = 0, where f ,g and their derivatives are all evaluated at point a. In matrix-vector form

article_965_order_0

We replace g(a) by g(xn) and are led to the recursion

article_965_order_1

which is Halley's (of the comet fame) method (2).

To observe its cubic convergence we take f(x) = x2 1, for which f'(x) = 2x, f''(x) = 2, and readily ascertain that

article_965_order_2

nearly, if xn is nearly equal to a = 1.

To prove the cubic convergence of Halley's method we write it as xn+1 = F(xn+1) for F(x) = x + g(x)f(x) and g(x) = -2f'/(2f'2 ff'). We verify that if f(a) = 0 then g(a) = -1/ f(a) and g'(a) = f''(a)/(2f'2(a)). It follows that F'(a) = F''(a) = 0, and convergence is indeed cubic for f(x) satisfying the hypotheses of the fixed-point iteration theorem.

Higher order methods.

Emboldened by the success in procuring the quadratic NR method and the cubic method of Halley from the fixed-point iteration method we venture to create a fourth degree iterative method by enforcing the three conditions F'(a) = F''(a) = F'''(a) = 0. Differentiating F(x) = x + g(x)f(x) thrice we have

F'(x) =1 + gf' + g'f, F'' + 2g'f' + g''f, F'''(x) = gf''' + 3g'f'' + 3g''f' + g'''f.

Setting F'(a) = F''(a) = F'''(a) = 0 produces the linear system

article_965_order_3

in which f,g and their derivatives are all evaluated at point a. The solution of this system is

article_965_order_4

and our proposed, hopefully quartic, iterative method, becomes

article_965_order_5

implicit in a formula of Householder (3).

To observe the order of convergence of this method we take the function f(x) = x2 1 for which f'(x) = 2x, f''(x) = 2, and determine that

article_965_order_6

nearly, if xn ≈ 1.

To prove the general quartic convergence of this method we write it as xn+1 = F(xn) for and g(x) = -(6f'2 3ff'')/(6f'3 f ff'f'' + f2f'''). We verify (using Mathematica) that if f(a) = 0 then g(a) = -1/f(a), g(a) = f''/(2f'2), and g''(a) = -f''2/(2f'3) + f'''/(3f'2. It readily results that F'(a) = F''(a) = F'''(a) = 0, proving that convergence is indeed quartic for f(x) satisfying the hypotheses of the fixed-point iteration theorem.

The higher order iterative methods can be recursively derived from the lower ones. We write the NR method in the form

xn+1 = xn q0(xn)f(xn)/q1(xn), q0 = 1, q1 = f'.

Then the higher order, cubic method of Halley, is of the form

xn+1 = xn 2q1f/q2, q2 = 2f'q1 - f q1' = 2f'2 ff''

and the next higher order method is of the form

xn+1 = xn 3q2f/q3, q3 = 3f'q2 - f q2' = 6f'3 6ff'f'' + f2f'''

and so on.

Computation of square roots.

In this section we consider high-order iterative methods for the solution of f(x) = x[up]2 α = 0. To obtain a quartic method from the fixed-point iteration theorem we propose to write f(x) = 0 as x = x + g(x)f(x), or shortly x = F(x). We take g(x) = (Ax + Bx2 + Cx4)/x, and fix constants A, B, C so that F'(a) = F''(a) = F'''(a) = 0 at a = √α. The special choice of weight function g(x) is designed to assure the explicit dependence of A, B, C on α only. In fact

article_965_order_7

We shall say that the rational approximation p,q to √α is optimal if p,q satisfy Pell's equation p2 αq2 = ±1. Started from an optimal x0, the NR method, Halley's method, and our quartic method of the previous section, all produce an optimal x1. For example, starting with the optimal x0 = 3/2 as a rational approximation to √2 we obtain from the NR method the optimal x1 = 17/12, from Halley's method the optimal x1 = 99/70, and from our quartic method of section 5 the optimal x1 = 577/408.

Computation of natural logarithms.

To obtain a higher order fixed-point iteration method for the root a = lnα of f(x) = ex α = 0 we write it as x = F(x) with F(x) = x + g(x)f(x), g(x) = A + Bex + Ce2x and fix constants A, B, C so that . Simple algebra leads to

article_965_order_8

and the quartic method

article_965_order_9

that requires the computation of en = exp(xn in each iterative cycle only once.

Computation of the exponential function.

To iteratively locate the root of f(x) = lnx - α we propose the recursion

article_965_order_10

and, as we did before, find

article_965_order_11

which depend on the given value αonly.

Computation of inverse trigonometric functions.

Once a good program is available for the evaluation of sin x and cos x, 0 < x < π/2, the inverse trigonometric function arcsin x can be obtained as the solution of sinx - α = 0. For a cubic iterative solution method we propose the weight function g(x) = A + B cos x, and ascertain that F'(a) = F''(a) = 0, a = arcsinα, if A = (3α2 - 2) /(2β3 and B = -α/(2β3, whereβ = √(1 α2).

For a quartic method we suggest the weight function

g(x) = (A + Bcosx)/(1 + Ccosx)

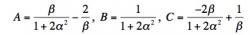

and verify that F'(a) = F''(a) = F'''(a) = 0 if

article_965_order_12

where β = √(1 α2).

Conclusions.

We have shown in this note how to systematically generate, high order, quickly converging, iterative methods, of any desired degree, for the solution of the single-unknown nonlinear equation f(x) = 0. Fast converging methods like those should be of great use in large-scale trajectory tracking algorithms that require the repetitive solution of a nonlinear equation many times over long time periods (as is the case in computational Astronomy) and where an efficient solution algorithm is imperative to avoid overtime computations.

We have dealt here exclusively with one unknown and functions that are highly differentiable. A common source of nonlinear equations is the search for the minimum points of a differentiable function, points where its derivative function vanishes. But functions that lack a derivative at their minima are also common in Min-Max problems and deserve attention. The solution of systems of nonlinear equations also deserves consideration, and will be studied next.

References

1. F Franklin, On Newton's method of approximation, American Journal of Mathematics, 4(1881), 275-276.

2. S. A. Corey, A method of solving numerical equations, The American Mathematical Monthly, 21(1914), 290-292.

3. H. Bateman, Halley's methods of solving equations, Amer. The American Mathematical Monthly, 45(1938), 11-17.

4. M. G. Pawley, New Criteria for Accuracy in Approximating Real Roots by the Newton-Raphson Method, National Mathematics Magazine, 15(1940), 111-120.

5. J. S. Frame, A variation of Newton's method, The American Mathematical Monthly, 51(1944), 36-38.

6. J. B. Reynolds, Reversion of power series with applications, The American Mathematical Monthly, 51(1944), 578-580.

7. H. S. Wall, A modification of Newton's method, The American Mathematical Monthly, 55(1948), 90-94.

8. H. J. Hamilton, A type of variation on Newton's method, The American Mathematical Monthly, 57(1950), 517-522.

9. J. K. Stewart, Another variation of Newton's method, The American Mathematical Monthly, 58(1951), 331-334.

10. T. R. Scavo and J. B. Thoo, On the Geometry of Halley's Method, The American Mathematical Monthly, 102(1955), 417-426.

11. R.W. Snyder, One more correction formula, The American Mathematical Monthly, 62(1955), 722-725.

12. J. M. Wolfe, A determinant formula for higher order approximation of roots, Mathematics Magazine, 31(1958), 197-199.

13. J. F. Traub, Iterative Methods for the solution of Equations, Prentice Hall, Englewood Cliffs, N.J., 1964.

14. L. M. Weiner, Note on Newton's method, Mathematics Magazine, 39(1966), 143-145.

15. J. Greenstadt, On the efficiencies of gradient methods, Mathematics of Computation, 21(1967), 360-367.

16. A. S. Householder, The numerical treatment of a single nonlinear equation, McGraw-Hill, New-York 1970.

17. S. M. Venit, Remarks concerning the delta method for approximating roots, The Two-Year College Mathematics Journal, 7(1976), 1-3.

18. A. Melman, Geometry and convergence of Euler's and Halley's methods, SIAM Review 39(1977), 728-735.

19. B. H. Brown, Jr., On Halley's Variation of Newton's Methods, The American Mathematical Monthly, 84(1977), 726-728.

20. W. Gander, On Halley's iteration method, The American Mathematical Monthly, 92(1985), 131-134.

21. D. F. Bailey A historical survey of solution by functional iteration, Mathematics Magazine, 62(1989), 155-166.

22. 1. J. F. Epperson, On the use of iteration methods for approximating the natural logarithm, The American Mathematical Monthly, 96(1989), 831-835.

23. J. Gerlach, Accelerated convergence of Newton's method, SIAM Review, 36(1994), 272-276.

24. Tjalling J. Ypman, Historical Development of the Newton-Raphson Method, Siam Review, Vol 37, No 4(1995), 531-551.

25. W. F. Ford and J. A. Pennline, Accelerated convergence of Newton's method, SIAM Review, 38(1996), 658-659.

26. S. H. Hetzler, A continuous version of Newton's method, The College Mathematics Journal, 28(1997), 348-351